ID: PMRREP34380 | 265 Pages | 9 Feb 2026 | Format: PDF, Excel, PPT* | Semiconductor Electronics

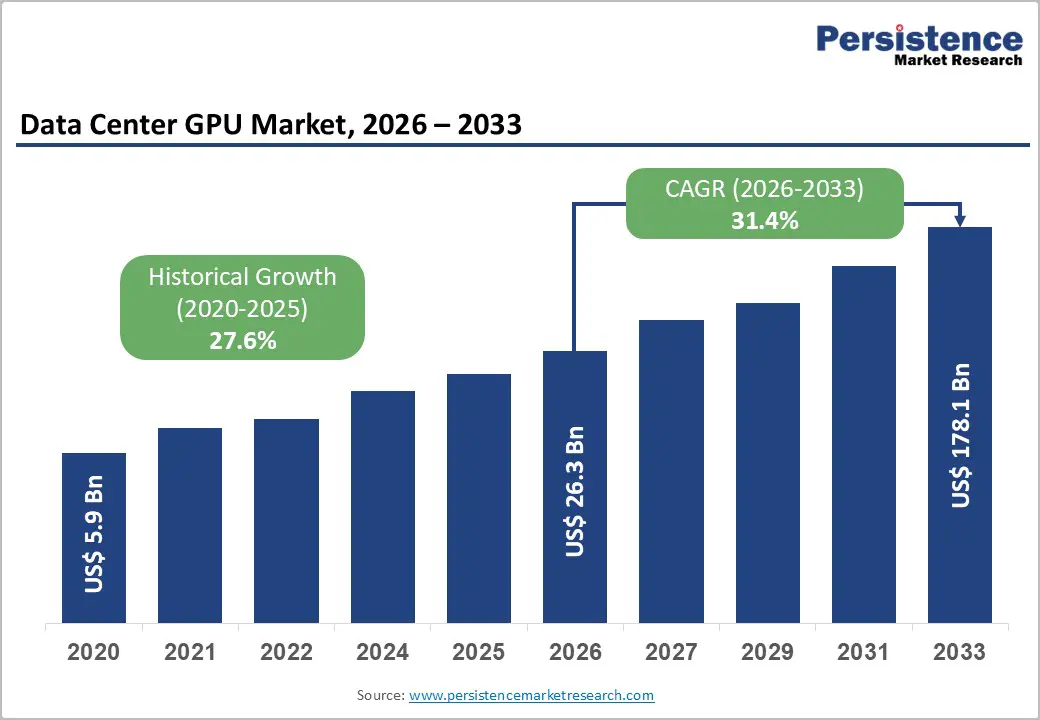

The global data center GPU market size is projected to rise from US$26.3 billion in 2026 to US$178.1 billion by 2033, growing at a CAGR of 31.4% during the forecast period from 2026 to 2033. Growth is driven by the explosive demand for large language model (LLM) training and deployment, enterprise-wide adoption of artificial intelligence and machine learning workloads, and the structural shift toward cloud-based GPU infrastructure as organizations prioritize operational flexibility over capital expenditure.

The transition from GPU scarcity to managed supply is enabling organizations to move from experimental AI pilots to production-grade deployments, anchoring the market's trajectory toward a nearly sevenfold increase in value over the forecast period.

| Key Insights | Details |
|---|---|
| Data Center GPU Market Size (2026E) | US$26.3 Bn |
| Market Value Forecast (2033F) | US$178.1 Bn |
| Projected Growth (CAGR 2026 to 2033) | 31.4% |
| Historical Market Growth (CAGR 2020 to 2025) | 27.6% |
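The projected growth rate can be cross-checked from the two endpoint values in the table. The sketch below is illustrative only: it assumes the standard compound annual growth rate formula over the seven years from 2026 to 2033, and the function and variable names are hypothetical rather than taken from the report.

```python
def cagr(start_value, end_value, years):
    """Compound annual growth rate between two values over `years` periods."""
    return (end_value / start_value) ** (1 / years) - 1


# Endpoint values quoted in the table above (US$ Bn).
start_bn = 26.3   # 2026E
end_bn = 178.1    # 2033F
years = 2033 - 2026

rate = cagr(start_bn, end_bn, years)
multiple = end_bn / start_bn

print(f"Implied CAGR: {rate:.1%}")
print(f"Growth multiple over the period: {multiple:.2f}x")
```

Under these assumptions, the implied rate agrees with the 31.4% CAGR stated in the table.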

The rapid scaling of large language models and AI applications has created computational demands that CPUs alone cannot meet, prompting enterprises and hyperscalers to adopt GPU clusters at scale. Leading technology firms are investing heavily in GPU capacity; for example, Meta deployed over 24,000 NVIDIA H100 GPUs for training and inference of Llama models. GPU acceleration shortens model training from months to weeks, enabling faster innovation cycles and competitive advantage. As AI moves from experimentation to production across sectors such as finance, healthcare, and manufacturing, sustained GPU procurement becomes a core infrastructure priority.

Cloud Service Providers (CSPs) such as AWS, Microsoft Azure, and Google Cloud are investing heavily in GPU-enabled infrastructure to meet surging enterprise demand for AI computing without requiring large upfront hardware investments. By launching advanced GPU instances such as AWS’s P6e-GB300 UltraServers (NVIDIA B200 Blackwell) and Google Cloud’s immediately available NVIDIA B200/GB200 Blackwell GPUs and Ironwood TPUs, CSPs are rapidly modernizing their data centers to support high-performance AI workloads. This shift is accelerating GPU adoption across industries, as enterprises increasingly rely on cloud-based GPU clusters. The pay-as-you-go pricing model has democratized access to AI infrastructure, enabling mid-market firms and startups to scale AI projects without heavy capital expenditure.

Modern GPU architectures such as NVIDIA Blackwell and AMD MI350 generate high thermal loads, often exceeding 1,000 watts per unit, significantly increasing data center power density and operational strain. A single H100 GPU rack requires approximately 44 kW for four nodes, well above conventional infrastructure standards and necessitating major upgrades to power distribution and electrical capacity. Cooling alone accounts for 40-50% of total energy consumption in AI data centers, making thermal management the primary bottleneck to performance scaling. Organizations in regions with limited grid capacity or access to renewable energy face severe constraints, widening the competitive gap between hyperscalers and mid-market enterprises.
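The power figures above can be turned into a rough sizing estimate. The sketch below is a simplification, not a facility model: it assumes the 40-50% cooling share of energy applies directly to average power, and that everything other than cooling is IT load for the rack. The function and constant names are hypothetical.

```python
# Figures quoted in the paragraph above.
RACK_POWER_KW = 44.0                 # one H100 rack with four nodes
NODES_PER_RACK = 4
COOLING_SHARE_RANGE = (0.40, 0.50)   # cooling as a share of total consumption


def facility_power_kw(it_power_kw, cooling_share):
    """Total power when cooling takes `cooling_share` of the total.

    Simplifying assumption: the remainder of the total is entirely IT load.
    """
    return it_power_kw / (1.0 - cooling_share)


per_node_kw = RACK_POWER_KW / NODES_PER_RACK
low_total, high_total = (
    facility_power_kw(RACK_POWER_KW, share) for share in COOLING_SHARE_RANGE
)

print(f"Power per node: {per_node_kw:.1f} kW")
print(f"Total per rack including cooling: {low_total:.1f} to {high_total:.1f} kW")
```

Under these assumptions, each node draws about 11 kW, and a single rack implies roughly 73 to 88 kW of total capacity once cooling is included.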

GPU supply constraints continue to create artificial scarcity, with availability remaining tight and many organizations unable to deploy planned GPU capacity expansions on schedule. A highly concentrated supplier landscape, dominated by a single vendor, increases dependency risk and limits customer options, weakening bargaining power. The constrained environment allows manufacturers to maintain premium pricing, potentially slowing adoption among price-sensitive enterprises and public sector buyers. Advanced fabrication nodes required for next-generation GPUs are constrained by capacity at semiconductor foundries, further limiting supply. Geopolitical export controls and trade restrictions fragment the global market, lengthening procurement cycles and complicating planning for GPU-dependent deployments.

The convergence of autonomous vehicles, robotics, and real-time edge analytics is creating a new demand segment for inference-optimized GPUs deployed at the network edge rather than centralized data centers. Autonomous vehicle systems rely on GPU acceleration to process sensor data in real time with ultra-low latency, enabling rapid decision-making essential for safety-critical collision avoidance and automated driving. Major technology providers are integrating edge GPU platforms into production vehicles and industrial robotics, signaling a broader shift toward distributed intelligence. Edge GPU deployments are expanding across IoT and real-time analytics applications, where on-device inference reduces reliance on remote data centers and improves responsiveness. This transition to distributed inference infrastructure is expected to drive incremental GPU demand as organizations balance the efficiency of centralized training with the resilience and latency requirements of edge inference.

The U.S. Department of Defense and allied governments are treating AI infrastructure as a strategic security priority, with the DoD awarding contracts worth up to US$200 million each to leading AI firms Google, xAI, Anthropic, and OpenAI to build advanced military AI capabilities. The DoD’s FY2026 AI budget request of approximately US$13.4 billion, coupled with major infrastructure investments like NVIDIA’s Solstice system featuring 100,000 Blackwell GPUs, underscores the scale of government-led GPU capacity expansion. The Department of Energy’s plan to establish AI data centers at 16 federal locations by 2027 further strengthens long-term structural demand. These programs support defense use cases, including autonomous systems, real-time intelligence analysis, and supply chain optimization, and require continuous upgrades and long procurement cycles. Geopolitical competition, especially between the U.S. and China, accelerates AI capability development and ensures multi-year GPU procurement commitments.

Hardware dominates the global market, capturing more than 67% market share in 2026 with a value exceeding US$17.7 Bn, as organizations face an urgent need for raw compute capacity to support AI training, generative AI, high-performance computing, and large-scale inference workloads. GPUs are capex-intensive assets, and each AI cluster requires thousands of high-value accelerators along with supporting servers, networking, and power infrastructure. Software and services scale with usage, but every deployment is anchored by physical GPU purchases, making hardware the dominant cost component. Rapid model size growth and shorter technology refresh cycles are driving frequent hardware upgrades and expansion.

GPU software & frameworks exhibit the highest CAGR of 36.5%, as they are the essential layer that unlocks the full potential of GPU hardware. As AI and deep learning models become more complex, organizations need optimized libraries, compilers, and runtime environments to efficiently train and deploy these models at scale. Software frameworks help standardize workflows, reduce development time, and enable portability across different GPU architectures. With the growing demand for AI inference and real-time analytics, enterprises increasingly invest in software tools that improve the performance, manageability, and security of GPU workloads.

On-premises deployment holds over 56% market share in 2026, with a value exceeding US$14.8 Bn, as organizations need direct control over performance, data, and infrastructure. GPU-intensive workloads such as AI model training, simulation, and real-time analytics require predictable low latency and dedicated compute, which on-premises environments provide better than shared cloud resources. High and sustained GPU utilization makes long-term on-premises capital expenditures more cost-effective than recurring cloud GPU costs for large-scale workloads.

Cloud-based deployment is expected to grow at the highest rate, with a CAGR of 37.8%, as organizations increasingly shift workloads to the cloud to avoid high upfront hardware costs and long procurement cycles. Cloud platforms provide flexible, on-demand GPU capacity that can scale instantly for training and inference needs, especially during peak compute periods. They also support multi-tenant environments, enabling businesses to run GPU-intensive applications without managing complex infrastructure or cooling requirements. In addition, they provide access to the latest GPU architectures and tools, allowing companies to innovate more quickly and reduce time-to-market.

Inference holds over 53% market share in 2026, with a value exceeding US$14 Bn, as organizations deploy AI models at scale for real-time decision-making rather than just training them. Business-critical applications such as search, recommendations, fraud detection, conversational AI, and video analytics require continuous, low-latency inference across millions of daily requests. Because inference workloads operate continuously, they drive higher cumulative GPU consumption and infrastructure costs. The rapid adoption of generative AI in customer-facing and operational workflows further amplifies demand for inference-optimized GPUs within data centers.

Training is expected to grow at a significant rate, with a CAGR of 32.6%, as organizations increasingly build and fine-tune large, domain-specific AI models rather than relying solely on pre-trained models. The surge in generative AI, multimodal models, and agentic systems requires massive parallel compute, high-bandwidth memory, and fast interconnects that only data center GPUs efficiently deliver. Enterprises also require continuous retraining to maintain model accuracy, compliance, and alignment with evolving data, regulations, and user behavior.

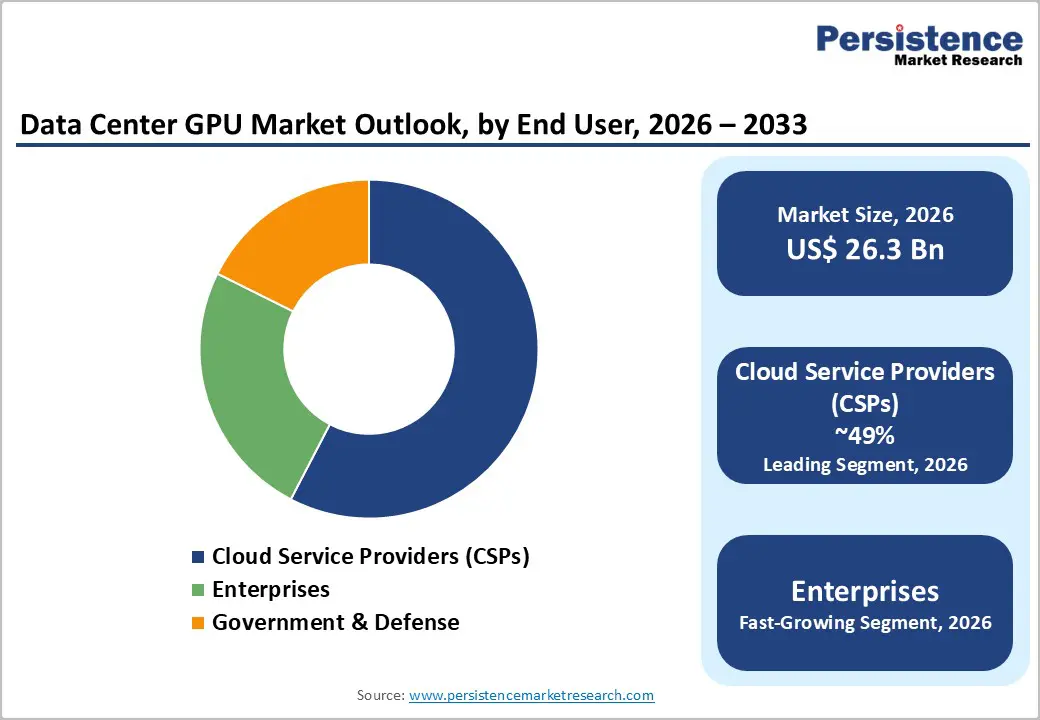

Cloud service providers (CSPs) hold the largest market share, exceeding 49% in 2026, with a value of over US$12.9 Bn, as they face the most acute need for massive, elastic compute to support AI training, inference, and data-intensive workloads on a global scale. Their business models depend on rapidly provisioning GPU capacity across regions to serve thousands of enterprises. CSPs also require high GPU utilization rates to maintain margins, driving continuous investment in the latest, most powerful accelerators. The growing demand for AI-as-a-service, foundation models, and real-time analytics is forcing CSPs to procure and deploy GPUs more quickly and at higher volumes.

Enterprises are expected to grow at a CAGR of 33.4% as their computing needs are shifting from general-purpose workloads to AI-intensive and data-heavy applications. Data sovereignty, latency control, and security requirements are also pushing enterprises to deploy GPUs within private or hybrid data centers rather than relying entirely on public cloud resources. Enterprises seek predictable long-term cost structures and tighter integration with existing IT and operational systems, which accelerates direct GPU investments.

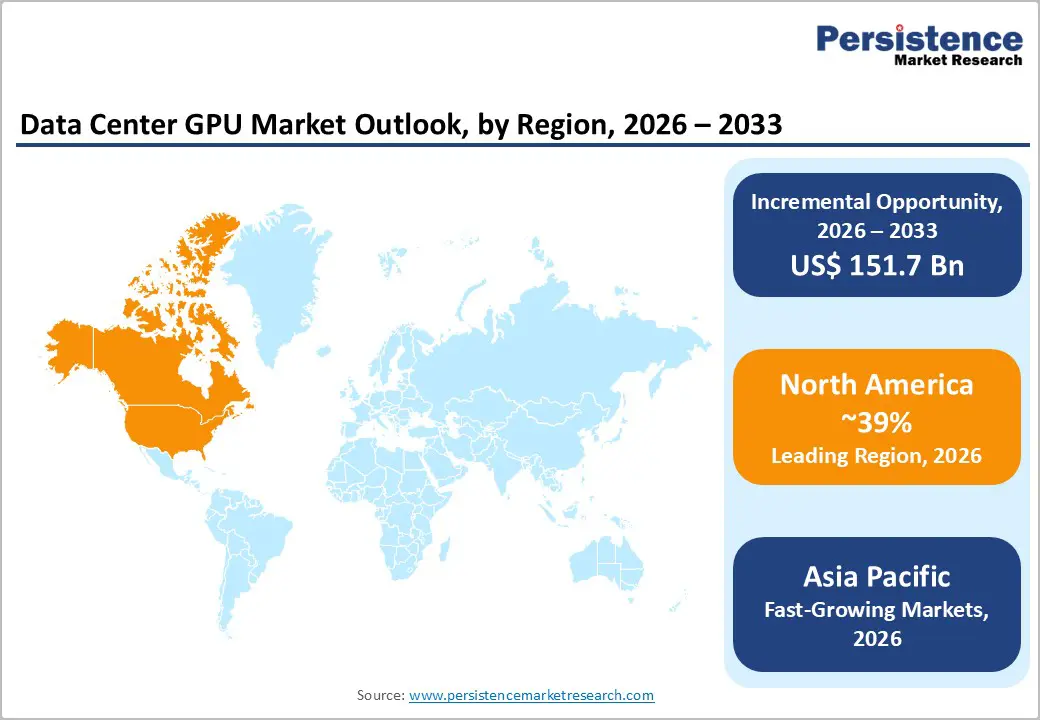

North America holds over 39% share in 2026, valued at US$10.3 Bn, driven by concentrated hyperscaler infrastructure and strong enterprise AI adoption. Major cloud providers continue to expand GPU offerings and secure high-performance compute capacity through strategic partnerships, intensifying competition for enterprise workload migration. The region also benefits from significant government and defense spending, which sustains procurement demand for advanced GPU systems. This environment has accelerated large-scale GPU deployments in hyperscale data centers to support production AI workloads.
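The 2026 values and minimum shares quoted for the leading segments and for North America can be checked against the US$26.3 Bn market total. The sketch below is a simple consistency check rather than part of the report's methodology; the dictionary layout and variable names are hypothetical, and each value is treated as a lower bound because the text gives it as "exceeding" or "over".

```python
TOTAL_2026_BN = 26.3  # global market size for 2026 (US$ Bn)

# (stated 2026 value in US$ Bn, stated minimum share) as quoted in the text.
stated = {
    "Hardware": (17.7, 0.67),
    "On-premises": (14.8, 0.56),
    "Inference": (14.0, 0.53),
    "Cloud service providers": (12.9, 0.49),
    "North America": (10.3, 0.39),
}

# Share implied by dividing each stated value by the market total.
implied = {name: value / TOTAL_2026_BN for name, (value, _) in stated.items()}

for name, (value, floor) in stated.items():
    status = "consistent" if implied[name] >= floor else "check"
    print(f"{name}: US${value} Bn implies {implied[name]:.1%} "
          f"(stated: over {floor:.0%}) -> {status}")
```

Each implied share sits at or slightly above its stated floor, so the quoted values and percentages are mutually consistent.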

Asia Pacific is expected to achieve the highest CAGR in the forecast period. Major cloud providers in China are consolidating GPU infrastructure to support AI services across e-commerce, gaming, and enterprise applications. India is emerging as a high-growth market as enterprises in BPO, financial services, and IT consulting integrate GPU acceleration into their platforms. Japan, South Korea, and Southeast Asian nations are building GPU-dense research facilities for AI model development and semiconductor design. The region benefits from manufacturing economics, allowing cloud providers to offer GPU computing at a cost advantage compared to other regions. Expansion is further supported by the deployment of 5G and fiber networks, which enable low-latency edge computing for real-time AI services.

Europe is expected to hold more than 22% share by 2026. Germany, France, the UK, and Spain are leading the region in deploying GPU clusters for AI training, simulation, and large language model development. Automotive manufacturers in Germany are integrating GPU infrastructure to accelerate autonomous-vehicle AI workflows, whereas France and the UK are developing research clusters supported by government AI investment initiatives. Operators are prioritizing energy-efficient GPU deployments that integrate direct liquid cooling and renewable energy to meet stringent EU sustainability and carbon-neutrality mandates. The region faces structural constraints, including limited renewable energy availability in some areas and stricter energy-efficiency regulations than in other regions.

The data center GPU market is highly consolidated, dominated by a few major players with strong IP portfolios, advanced GPU architectures, and extensive ecosystems. Manufacturers compete through continuous innovation in performance-per-watt, delivering specialized GPUs for AI training and inference, while aggressively optimizing memory bandwidth and interconnect technologies. They also focus on strategic partnerships with hyperscalers, cloud providers, and OEMs, enabling deep integration into data center stacks and exclusive supply agreements.

The global data center GPU market is projected to be valued at US$26.3 Bn in 2026.

The growing need for high-performance computing to support AI, real-time analytics, and large-scale virtualization workloads that CPUs alone cannot efficiently handle is a key driver of the market.

The market is expected to witness a CAGR of 31.4% from 2026 to 2033.

The expansion of edge and hybrid cloud deployments, together with advancements in GPU software ecosystems and custom AI accelerators, is creating strong growth opportunities.

NVIDIA Corporation, Advanced Micro Devices, Inc., Intel Corporation, Google LLC, Microsoft Corporation, Amazon Web Services, Inc., and Alibaba Cloud are among the key players.

| Report Attribute | Details |
|---|---|
| Historical Data/Actuals | 2020 - 2025 |
| Forecast Period | 2026 - 2033 |
| Market Analysis Units | Value: US$ Bn/Mn, Volume: As Applicable |
| Geographical Coverage | |
| Segmental Coverage | |
| Competitive Analysis | |
| Report Highlights | |

By Offering

By Deployment

By Function

By End-user

By Region
